You wouldn’t hand over your portfolio to a stranger who speaks confidently but has never managed a dollar. So why are companies outsourcing their forecasting and strategic decision-making to large language models (LLMs), tools that sound smart but lack context and true understanding? Possible answers include stress, nuance, the need for speed, and the fact that there might be 200 different models to choose from for a specific problem. The challenge arises when these circumstances push people to blindly trust AI for the answer. A surgeon who has performed more than 1,000 surgeries knows exactly which tool to pick, based on that vast experience. Because of the physical nature of the work and industry regulations, surgeons cannot outsource this highly cognitive task to AI. But statistical and machine learning experts work in the digital world, where their judgment is more easily displaced by novice AI models, even though cases where AI usage went wrong abound. For instance, recent reporting shows the limitations and real-world consequences of using AI to value real estate.

A Goldman Sachs report warning about the potential for AI to disrupt labor markets illustrates the expected uneven impact across different functions, with about a quarter of jobs affected overall in the US and Europe. A disproportionate number of those impacted will be white-collar and administrative roles. On the other hand, manual and non-routine roles are predicted to face the least amount of AI disruption.

This predicted disruption helps explain many companies’ eagerness to jump into these new technologies on impulse and without a clear business purpose. Gartner recently predicted that over 40% of agentic AI projects will be canceled by the end of 2027.

“Most agentic AI projects right now are early-stage experiments or proof of concepts that are mostly driven by hype and are often misapplied. This can blind organizations to the real cost and complexity of deploying AI agents at scale, stalling projects from moving into production. They need to cut through the hype to make careful, strategic decisions about where and how they apply this emerging technology.”

Anushree Verma, Senior Director Analyst, Gartner

The business press has covered the struggles companies face in deriving value from AI. An article from S&P Global states that 42% of respondents abandoned their AI initiatives before they reached production (up from 17% the previous year). Surveys such as this suggest that AI is not moving the needle on business performance in a way that matches the hype.

This is not an anti-AI stance; it is a warning against the blind application of AI to problems it is not built to solve — like statistical forecasting, judgment-based trade-offs, or risk modeling.

A surgeon wouldn’t use a shovel to perform surgery, but that is exactly what’s happening in business: LLMs are being thrown at modeling problems without proper expert oversight simply because they’re new, available, and promoted by effective marketing campaigns.

This isn’t innovation. It’s costing companies time, trust, and a strategic edge.

Why is this happening? It’s simple. Budgets are tighter. Timelines are shorter. Expectations are higher. AI is seen as the savior, and technical nuance is often ignored in favor of “good enough.” But here’s the problem: when you’re betting millions, “good enough” doesn’t cut it. The most successful firms in history didn’t get there by taking shortcuts. They minimized risks, played a long game, and listened to experts to develop repeatable outcomes.

Forecasting, statistics, and judgment? Those are not tasks to be thrown into the stochastic blender of a language model trained to autocomplete. They are problem-solving tasks that need to be context-aware. Let’s reframe the problem by discussing where AI truly adds value — and where human common sense must remain non-negotiable. Because if your AI strategy doesn’t start with judgment, it ends in delusion.

When it comes to forecasting, AI assists—humans lead

AI can produce better forecasts, but only with humans at the forefront. Pairing highly skilled, trained practitioners with AI enhancement offers a substantial advantage over the short-sighted, AI-led, easy-way-out alternative.

A strong forecasting team is small, with one senior subject matter expert (SME) at fractional time working with one or two junior analysts. The SME should lead the higher-level strategic decisions, while junior analysts manage AI tool deployment, support methodological discussions, and build their skills as ML practitioners.

Low-cognitive tasks will be upended by a new AI start-up every six months. Beware of claims that AI is a button you press to make a business problem disappear (automating it out of existence); that kind of automation will only become cheaper and faster every year, so it offers no lasting edge. Lesson: do not play this game. Stay creative!

We argue for three key players in successful forecasting implementations.

- The industry expert focuses on extracting value from forecasting within the business. For example, they should know how forecasts relate to inventory decisions and opportunity costs from lost sales. After all, every future decision in a business relates to a forecast.

- The forecasting expert translates the latest scientific discoveries into practice. As problem solvers with deep knowledge of research and past forecasting model failures, they know when AI should be applied and when it shouldn’t. They link the business’s needs with careful statistical modeling. Pairing an industry expert (or client) with the forecasting expert to codevelop solutions is the best formula for success.

- Junior analysts perform the bulk of the work, learning and developing as they collaborate alongside senior experts. They actively use AI to enhance or replace low-cognitive tasks while taking on higher-cognitive tasks as they develop into strategic resources for the firm.
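To make the industry expert's link between forecasts, inventory decisions, and lost sales concrete, here is a minimal sketch based on the classic newsvendor model. It is an illustration under stated assumptions, not a description of any specific engagement: demand is assumed to be normally distributed around the forecast, and the function name and cost parameters are hypothetical.

```python
from statistics import NormalDist


def newsvendor_order_quantity(mean_demand, sd_demand, unit_cost,
                              unit_price, salvage_value=0.0):
    """Order quantity that balances lost sales against unsold stock.

    Assumes normally distributed demand (an illustrative simplification).
    """
    # Underage cost: margin lost for each unit of demand we cannot serve.
    underage = unit_price - unit_cost
    # Overage cost: loss on each unit bought but not sold.
    overage = unit_cost - salvage_value
    if underage <= 0 or overage <= 0:
        raise ValueError("Need salvage_value < unit_cost < unit_price.")
    # Critical ratio: the service level at which the two costs balance.
    critical_ratio = underage / (underage + overage)
    # The forecast mean and its uncertainty both shape the decision.
    return NormalDist(mean_demand, sd_demand).inv_cdf(critical_ratio)
```

In this sketch the forecast enters twice: its mean sets the center of the order, and its uncertainty sets how far above or below that center to go. When a lost sale costs more than an unsold unit, the quantity lands above the forecast mean, which is the kind of trade-off the industry expert is expected to recognize.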

Teams configured in this way can develop an effective approach to capturing patterns in the underlying data and predicting future outcomes. For the last 20 years, the goal has been to maximize the accuracy of predictions. In recent years, however, AI/ML models have increasingly overfit on the past, resulting in high-profile accuracy failures that also lack explainability, a non-starter in high-value forecasting challenges. The previously referenced home price forecasting example is a case in point: the issue stemmed from a lack of established causality between the inputs and outcomes. In other words, the patterns of the past were not repeatable.

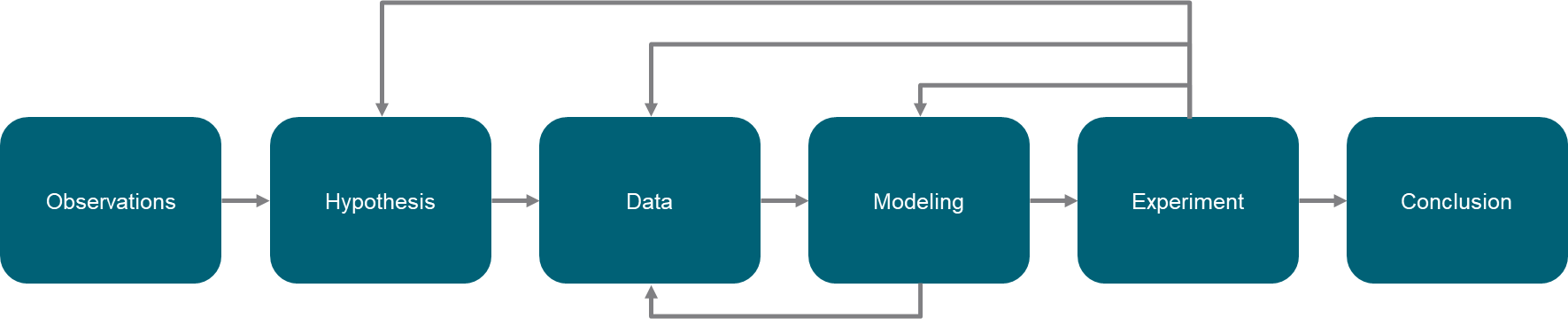

Our approach combines our industry knowledge with our forecasting expertise to identify future patterns that are the most repeatable. We also attempt to identify patterns that are not repeatable or those that may be disrupted by changes in the macroeconomic environment, such as tariffs or COVID. By not overfitting on the past, we’re more likely to see a return on investments in the future. In essence, our approach aligns with the Scientific Method:

Observation --> Hypothesis --> Data --> Experiment --> Conclusion

Testing and validation are critical. We test a hypothesis (data pattern) before we suggest our clients invest in it.
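One common way to test whether a pattern is repeatable is a rolling-origin backtest: refit on the data available at each point in time, forecast the next few periods, and compare the out-of-sample error with a simple baseline. The sketch below is a minimal illustration of that idea, not our production method; the function names, the seasonal naive baseline, the 12-period season, and the default window sizes are all assumptions chosen for the example.

```python
from typing import Callable, List, Sequence

# A forecaster takes the history so far and a horizon, and returns forecasts.
Forecaster = Callable[[Sequence[float], int], List[float]]


def seasonal_naive(history: Sequence[float], horizon: int,
                   season: int = 12) -> List[float]:
    """Baseline: repeat the most recent seasonal cycle."""
    last_cycle = list(history[-season:])
    return [last_cycle[i % season] for i in range(horizon)]


def mean_absolute_error(actual: Sequence[float],
                        predicted: Sequence[float]) -> float:
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rolling_origin_mae(series: Sequence[float], forecaster: Forecaster,
                       min_train: int = 24, horizon: int = 3) -> float:
    """Average out-of-sample MAE over successive forecast origins."""
    errors = []
    for origin in range(min_train, len(series) - horizon + 1):
        # Only data before the origin is visible to the forecaster.
        train = series[:origin]
        test = series[origin:origin + horizon]
        errors.append(mean_absolute_error(test, forecaster(train, horizon)))
    return sum(errors) / len(errors)


def passes_backtest(series: Sequence[float], candidate: Forecaster,
                    baseline: Forecaster = seasonal_naive) -> bool:
    """True only if the candidate beats the baseline out of sample."""
    return (rolling_origin_mae(series, candidate)
            < rolling_origin_mae(series, baseline))
```

A candidate model that cannot beat the baseline on data it has never seen has most likely fit noise in the past rather than a repeatable pattern, and the hypothesis is rejected before any investment is recommended.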

Here’s how we put these practices into action:

In a recent engagement, we helped our client automate part of their monthly financial close using AI, delivering results in just five weeks. Our seasoned industry experts and forecasting specialists worked with the client’s team to build a solution covering 200+ revenue streams. The AI-driven forecasting tool identified seasonal revenue increases and important trends, informing strategic decisions that affect 300M USD of revenue. It also flagged problematic revenue streams, equipping the CFO to act proactively and improve future revenue estimates. The CFO praised the team for solving a strategic and analytically challenging issue they had struggled with for five years.

Common sense: The unbeatable advantage

AI is an amazing tool with a myriad of virtues. It also has some substantial shortcomings that call for caution in its use and adoption. One of them goes to the heart of our firm’s core values: AI lacks common sense. It is not context-aware without expert prompting, it struggles with the nuances of real-world problems, and it doesn’t grasp abstract concepts; instead, it repeats learned patterns that anyone can implement for cents on the dollar. Thus, the credibility of experts and the trust of clients are critical for the adoption of AI in forecasting. Accountability is a key part of the equation: ChatGPT can’t be held accountable for a forecast gone wrong, but humans can.

We’re at a pivotal moment, with AI poised to reshape business outcomes and the economy in general, and it’s up to us to make the right decisions. Pace is important, timing is paramount, and a careful weighing of risks and benefits is called for. As AlixPartners Co-CEO Rob Hornby puts it:

“We are certainly at the beginning of an AI automation revolution that will assume many work tasks and eventually redefine organizations. This will be gradual but ultimately transformational. Right now, leaders should focus on solving specific business problems using machine learning and generative AI for the three Cs of content, coding, and conversation. Some labor can be saved, but new skills will also be needed. For most companies, being a fast follower is enough, and executives should require evidence of proven benefits elsewhere before they invest.”

Rob Hornby, Co-CEO, AlixPartners